You know, working with AI workflows can sometimes feel like trying to untangle a knotted necklace—just when you think you’ve got it straightened out, another snag appears. I’ve been there, staring at error messages and wondering why my tools suddenly decided not to talk to each other. It’s messy, frustrating, and honestly a little humbling. But, hey, that’s part of the journey, right?

API Connection Breakdowns: Why Can’t My Tools Talk?

Let me tell you, the number of times I’ve lost hours to flaky API connections would be comical if it weren’t so maddening. Sometimes it’s a tiny timeout that causes trouble out of all proportion to its size. Other times, the API changes without warning and your seamless integration suddenly turns into a cryptic “404 Not Found” nightmare.

One time, I had an API key expire (a classic rookie mistake, since I hadn’t checked the expiry date), and for a good part of an afternoon my workflow was basically spinning its wheels with no progress. It’s the kind of thing that makes you want to throw your laptop out the window (don’t worry, I didn’t). So if I could give one piece of advice here, it’s this: always keep an eye on your API credentials and on version changes. A little preventive housekeeping saves a lot of late-night headaches.
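To make that housekeeping a bit more concrete, here’s a minimal sketch of two habits that would have saved me that afternoon: retrying transient failures with exponential backoff, and warning before a key expires. The names here (`TransientAPIError`, `call_with_retry`, `key_expires_soon`) are my own placeholders, not part of any particular SDK, and the delays and the seven-day window are assumptions you’d tune for your own setup.

```python
import time
from datetime import datetime, timedelta, timezone


class TransientAPIError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 5xx)."""


def call_with_retry(request_fn, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call request_fn, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return request_fn()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...


def key_expires_soon(expires_at, warn_within=timedelta(days=7), now=None):
    """Return True if the credential expires within the warning window."""
    now = now or datetime.now(timezone.utc)
    return expires_at - now <= warn_within
```

In practice you’d wrap your real client call in a small function that raises `TransientAPIError` on timeouts, and run `key_expires_soon` on a schedule so the warning arrives before the outage does.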

Prompt Engineering Pitfalls: When Your Instructions Go Astray

Okay, this one’s almost an art form, isn’t it? Getting your AI to understand exactly what you mean can feel like trying to explain a punchline to someone who just doesn’t get your sense of humor. I remember crafting what I thought was a crystal-clear prompt—only to get bizarre, off-the-wall responses that made me question if I was even speaking the same language as the model.

Turns out, prompt wording matters way more than I initially believed. Even the slightest change, like swapping “summarize” for “explain briefly,” can completely shift the output. It’s a bit like tuning a radio; sometimes you need a gentle nudge to get the signal just right. And oh, the joy when it finally clicks! But yeah, expect some trial and error here.
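One way to make that trial and error less haphazard is to run the same input through each wording and read the results side by side. Here’s a rough sketch of that idea. Note that `fake_model` is only a stand-in so the example runs on its own; you’d swap in whatever function calls your actual model, and the two templates are just illustrations.

```python
def compare_prompts(model_fn, variants, text):
    """Run each prompt variant on the same input and collect outputs by name."""
    results = {}
    for name, template in variants.items():
        prompt = template.format(text=text)
        results[name] = model_fn(prompt)
    return results


def fake_model(prompt):
    """Stand-in for a real model call; it just reports the prompt length."""
    return f"({len(prompt)} chars of prompt received)"


variants = {
    "summarize": "Summarize the following text:\n{text}",
    "explain_briefly": "Explain the following text briefly:\n{text}",
}
outputs = compare_prompts(fake_model, variants, "Quarterly sales rose.")
for name, output in outputs.items():
    print(f"{name}: {output}")
```

Keeping the variants in one dictionary also gives you a record of what you’ve already tried, which helps when a wording that worked last week stops working.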

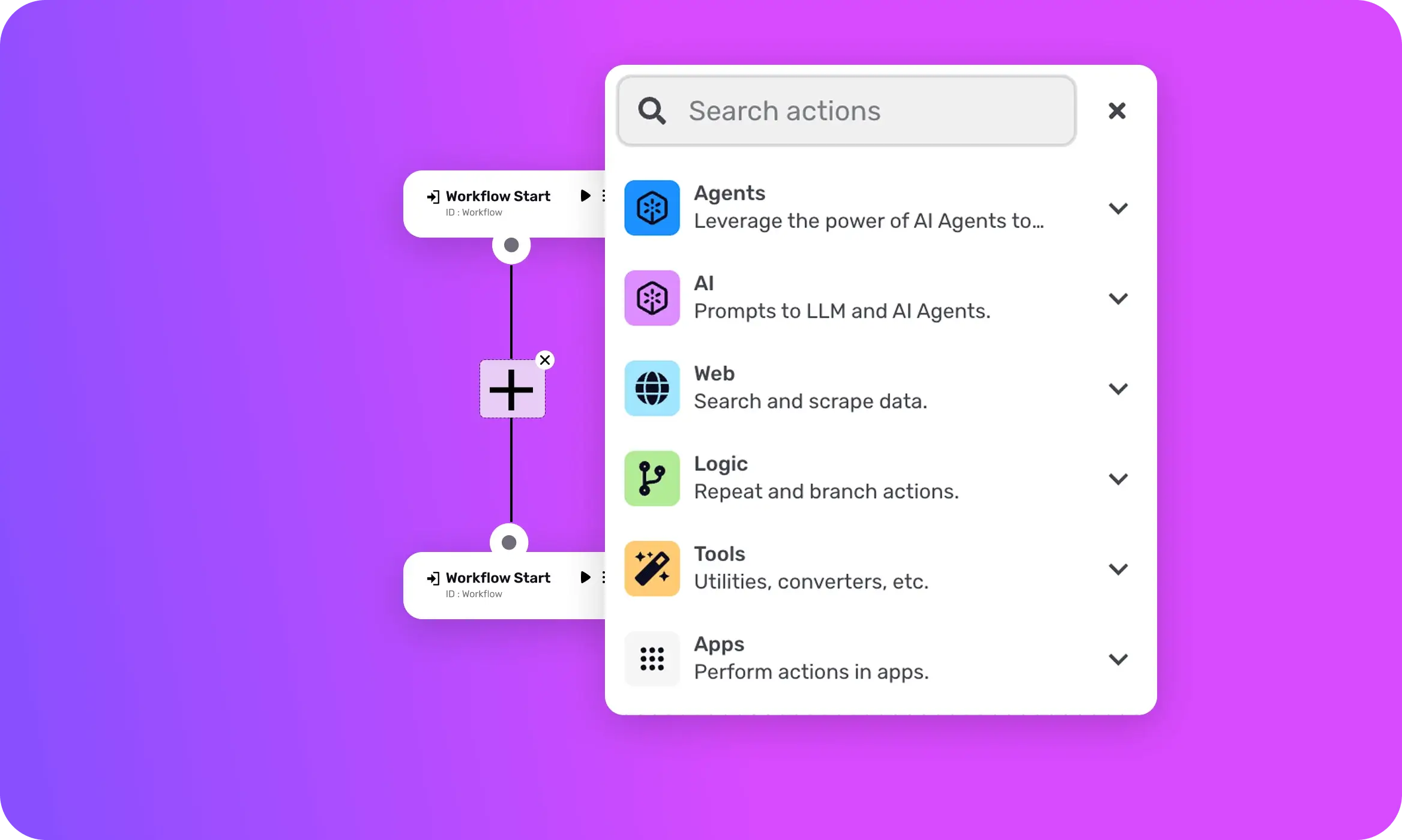

Data Mismatches and Formatting Fiascos

Here’s something that’s definitely tripped me up: the maddening mismatch between data formats. Imagine feeding your AI a neat CSV file, only to realize halfway that one column’s dates are in “MM-DD-YYYY” while another’s stubbornly stuck in “DD/MM/YYYY.” That sinking feeling? Yeah, I know it well. It derails your entire pipeline unless caught early.

And don’t get me started on encoding issues—like when some characters mysteriously turn into gibberish. I’ve spent way too long hunting down whether it’s a UTF-8 thing or just me being careless. Honestly, cleaning and standardizing data feels like a never-ending chore but it’s absolutely vital. A misformatted dataset can send your model down rabbit holes you didn’t know existed.
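Here’s a small sketch of how I’d standardize the two problems above using only the standard library. The column names (`order_date`, `ship_date`) are made up for illustration. The key assumption is that you declare each column’s format up front, because a value like 03/04/2024 is genuinely ambiguous and can’t be guessed reliably.

```python
from datetime import datetime

# Assumed per-column formats, declared explicitly rather than guessed.
COLUMN_FORMATS = {
    "order_date": "%m-%d-%Y",  # MM-DD-YYYY
    "ship_date": "%d/%m/%Y",   # DD/MM/YYYY
}


def normalize_dates(row, column_formats=COLUMN_FORMATS):
    """Return a copy of row with the declared date columns as ISO 8601 strings."""
    cleaned = dict(row)
    for column, fmt in column_formats.items():
        try:
            parsed = datetime.strptime(row[column], fmt)
        except ValueError as exc:
            raise ValueError(f"{column}={row[column]!r} does not match {fmt}") from exc
        cleaned[column] = parsed.date().isoformat()
    return cleaned


def decode_text(raw_bytes):
    """Decode as UTF-8, failing loudly instead of silently producing gibberish."""
    try:
        return raw_bytes.decode("utf-8")
    except UnicodeDecodeError as exc:
        raise ValueError(f"input is not valid UTF-8 (byte offset {exc.start})") from exc


print(normalize_dates({"order_date": "03-04-2024", "ship_date": "03/04/2024"}))
# {'order_date': '2024-03-04', 'ship_date': '2024-04-03'}
```

Notice that the same digits come out as two different days once each column is read with its own format, which is exactly the kind of mismatch that’s easy to miss by eye.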

The Black Box Blues: Decoding Opaque Model Errors

This is probably the most mystifying part. Sometimes your model gives you an error, but the explanation is as clear as mud—usually just some cryptic code or a vague comment in the logs. I’ve lost count of how often I wished AI came with a friendly “I’m sorry, here’s what went wrong” note.

What’s frustrating is that these black box errors keep you guessing, and that uncertainty is stressful. I remember late nights poking around debug tools, trying every possible fix like a detective on a case that won’t close. Sometimes it even feels like the model is deliberately playing hide-and-seek. But with persistence (and a fair amount of caffeine), patterns start to emerge. And that’s when things begin to make sense again.

So, yes, these common pitfalls are part and parcel of managing AI workflows. They can infuriate you, confuse you, and sometimes even break your spirit a little. But they also sharpen your skills and keep you humble—which, if you ask me, is a decent trade-off. And honestly, when you finally solve one of these issues, it feels like winning a small but very real battle.

Community to the Rescue: How Others Are Solving AI Headaches

You know, working with AI can sometimes feel like you’re elbow-deep in spaghetti code, trying to untangle a mess nobody warned you about. That sinking feeling when your model just won’t train right, or your prompt produces nonsense… Well, that’s where the community swoops in like a lifesaver. Honestly, I think having a community is one of the best ways to smooth out those rough edges AI throws at you.

Active Discords and Forums: Real-Time Troubleshooting

I’ve lost count of how many times hopping onto a lively Discord server saved my project. These spaces are buzzing 24/7 with folks who are knee-deep in the same challenges, sharing fixes as soon as problems pop up. There’s something reassuring about knowing you’re not alone when your code crashes at 2 a.m.—and people actually respond (sometimes within minutes!).

Forums too (Reddit subs, Stack Overflow threads) offer a treasure trove of wisdom from people who’ve been there, done that. I remember struggling with a weird API glitch for hours until a stranger’s comment casually mentioned a patch I had never heard about. That’s the beauty of these real-time communities: constant updates and quick help.

Shared Templates and Blueprints: Standing on the Shoulders of Giants

One of my favorite things about the AI space is how generous people are with their templates. Whether it’s a prompt template for a chatbot or a model architecture blueprint, sharing these resources can save you days—sometimes weeks—of trial and error. I mean, why reinvent the wheel when someone’s already built a shiny, well-oiled one?

These shared templates are more than just starting points; they’re like cheat codes that help you focus on your unique twist instead of the basics everyone has to figure out. Frankly, I’ve stolen—er, borrowed—a few myself. And there’s zero shame in that because the collective knowledge accelerates everyone’s progress.

Learning from Real-World Examples: Case Studies and Success Stories

Ever find motivation slipping away when your AI experiments stall? Well, diving into case studies of actual projects can reignite that spark. There’s something deeply satisfying, and practical, about reading how someone else tackled a textbook classification problem or optimized recommendations for a retail app. These stories ground abstract theory in real-life wins (and missteps!).

In fact, some of the coolest ideas I’ve lifted came from dissecting success stories. It’s like eavesdropping on the behind-the-scenes drama, with all its hiccups, “aha” moments, and those classic “oops” blunders that humanize the whole process. I always recommend carving out time to explore these examples, even if you’re swamped—you’ll thank yourself later.

Open-Source Tools and Libraries: Contributing to the Collective Knowledge

Okay, this is where things get really exciting. Beyond just using what’s out there, many of us join the ranks of contributors pushing open-source AI projects forward. From simple bug fixes to launching new features, participating in these projects feels like being part of something bigger than yourself.

Let me tell you, the first time I submitted a pull request to a popular AI library, I was both nervous and thrilled (and yeah, a little scared my code would be shredded). But the feedback was constructive, and it pushed me to write cleaner code. Plus, there’s a unique satisfaction that comes with knowing your work helps tons of other folks avoid the headaches you faced.

So, when AI feels overwhelming or downright frustrating, remember: you’re backed by a global community. These spaces offer not just answers, but also empathy and encouragement. And honestly? Sometimes just knowing that others have stumbled down the same rocky path makes the journey a little less lonely.

Practical Tips for Smoother AI Workflows

You know, when you first dive into building AI workflows, it’s easy to get overwhelmed by the complexity and all the moving parts. I remember staring at my screen during an early project, feeling like I was planting seeds in a storm—exciting, sure, but also pretty chaotic. Over time, I learned a few tricks that really helped smooth things out, and I want to share some practical tips that made a real difference for me.

Thorough Testing and Validation: Catching Errors Early

Oh man, let me tell you—testing isn’t just a checkbox on your to-do list. It’s your safety net. I’ve been bitten by skipping thorough tests more times than I’d like to admit. The minute you let something slip through, you get that sinking feeling watching your AI spit out weird results or, worse, crash mid-process. That’s why testing every little component—input validation, edge cases, even the oddball scenarios that feel unlikely—is crucial.

Personally, I like to think of it as proofreading a novel. You catch spelling mistakes before the book goes to print, right? Same deal here. Automated unit tests combined with manual sanity checks can save hours (or days) of headaches down the road. And hey, it doesn’t have to be scary. Start small, keep it consistent, and watch those bugs disappear—or at least get caught before they cause trouble.
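If “start small” sounds vague, here’s roughly what I mean: one validation function and a handful of unit tests around it, edge cases included. The record shape (a `text` field and a `score` between 0 and 1) is an assumption for the sake of the example, so adapt the rules to whatever your workflow actually receives.

```python
import unittest


def validate_record(record):
    """Return a list of problems found in one input record (empty if valid)."""
    problems = []
    text = record.get("text")
    if not isinstance(text, str) or not text.strip():
        problems.append("text must be a non-empty string")
    score = record.get("score")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, (int, float)):
        problems.append("score must be a number")
    elif not 0.0 <= score <= 1.0:
        problems.append("score must be between 0 and 1")
    return problems


class ValidateRecordTests(unittest.TestCase):
    def test_valid_record_has_no_problems(self):
        self.assertEqual(validate_record({"text": "hello", "score": 0.5}), [])

    def test_blank_text_is_rejected(self):
        problems = validate_record({"text": "   ", "score": 0.5})
        self.assertIn("text must be a non-empty string", problems)

    def test_out_of_range_score_is_rejected(self):
        problems = validate_record({"text": "hello", "score": 1.5})
        self.assertIn("score must be between 0 and 1", problems)

    def test_missing_fields_are_all_reported(self):
        self.assertEqual(len(validate_record({})), 2)
```

Save it in a file and run it with `python -m unittest`. Returning a list of problems rather than raising on the first one is a deliberate choice here: you see everything wrong with a record in a single pass.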

Modular Design: Isolating Issues for Easier Debugging

Let’s talk architecture for a minute. When your AI workflow looks like a spaghetti bowl of tangled code, debugging becomes a nightmare. I’ve been there—trying to untangle some mysterious error only to realize it was buried deep inside a giant monolith of code. Yeah, not fun.

That’s why breaking your workflow into smaller, modular components is a game changer. Think Lego blocks—each piece does one thing well and talks to the others in a clean, predictable way. Need to fix or upgrade something? You handle just that piece, without upsetting the whole system.

Plus, modular design feels empowering. It’s like having a Swiss Army knife instead of one giant, clunky tool. You know exactly which blade to pull out when you need it.
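Here’s a toy version of the Lego idea, just to show the shape of it. Each stage is a plain function that does one thing, and a tiny runner chains them together. The stages themselves (`clean`, `tokenize`, `count_tokens`) are invented for this example; your real ones would be things like data loading, prompting, and post-processing.

```python
def clean(text):
    """Stage 1: trim whitespace and collapse internal runs of spaces."""
    return " ".join(text.split())


def tokenize(text):
    """Stage 2: split cleaned text into lowercase tokens."""
    return text.lower().split(" ") if text else []


def count_tokens(tokens):
    """Stage 3: count how often each token appears."""
    counts = {}
    for token in tokens:
        counts[token] = counts.get(token, 0) + 1
    return counts


def run_pipeline(value, stages):
    """Feed the value through each stage in order."""
    for stage in stages:
        value = stage(value)
    return value


stages = [clean, tokenize, count_tokens]
print(run_pipeline("  The cat  saw the   dog ", stages))
# {'the': 2, 'cat': 1, 'saw': 1, 'dog': 1}
```

Because every stage can be called on its own, you can test or replace `tokenize` without touching the other two, which is the whole point.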

Detailed Logging and Monitoring: Tracking Your Workflow’s Performance

This one is kind of like leaving breadcrumbs. Detailed logs and monitoring tools keep track of what’s happening inside your workflow at every step. Trust me, when something goes sideways at 2 AM (because why wouldn’t it?), you want to be able to play detective without needing a crystal ball.

I’m not just talking about error messages, but also performance data, input and output snapshots, timing info, you name it. In one project, logging helped me spot a bottleneck that was slowing predictions by almost 40%. Fixing it was a huge win because it eventually saved our team hours of daily waiting time.

Of course, too much data can be overwhelming, so I recommend tailoring logs to your project’s needs. Think of it like adjusting the brightness on your phone screen—not too dim, not blinding.
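As a starting point, here’s a minimal sketch of the breadcrumb idea using Python’s standard `logging` module: a decorator that records how long each step took and whether it succeeded. The logger name `workflow` and the `predict` step are placeholders I made up, and the log format is just one reasonable choice.

```python
import functools
import logging
import time

logger = logging.getLogger("workflow")


def log_step(func):
    """Log the duration and outcome of a workflow step."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            elapsed = time.perf_counter() - start
            # logger.exception also records the traceback.
            logger.exception("step=%s status=error seconds=%.3f", func.__name__, elapsed)
            raise
        elapsed = time.perf_counter() - start
        logger.info("step=%s status=ok seconds=%.3f", func.__name__, elapsed)
        return result
    return wrapper


@log_step
def predict(batch):
    """Hypothetical step standing in for a real model call."""
    return [len(item) for item in batch]


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
    predict(["short", "a longer input"])
```

The `key=value` style in the messages is there so you can grep or filter by step later. To dial the brightness up or down, change the level passed to `basicConfig` rather than deleting log calls.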

Version Control: Rolling Back to Stable States

If you haven’t used version control for your AI projects, you’re missing out on a safety net that’s almost like having a time machine. As someone who once lost hours trying to revert a workflow after a shaky update, I can’t emphasize this enough.

Version control tools (the usual suspects, like Git) let you track every change, compare different versions, and roll back to the last known stable state when things go haywire. It’s a huge stress reducer. Plus, if you work with teammates, it keeps everyone on the same page and prevents accidental overwrites, the kind of thing that gives you premature gray hairs.

Honestly, embracing these practical tips turned my AI projects from precarious juggling acts into more manageable (and way less stressful) workflows. Give them a try—you might feel that crisp morning air of control and clarity creeping in, too.

Build a Debugging Mindset: Thinking Like an AI Troubleshooter

You know, debugging AI models can feel a bit like detective work in a sci-fi movie—minus the dramatic soundtrack, unfortunately. But here’s what I’ve learned after diving headfirst into AI troubleshooting: it’s not just about fixing errors; it’s about developing a mindset that welcomes curiosity, experimentation, and patience. Honestly, the biggest shifts happen when you stop seeing bugs as nuisances and start seeing them as clues—like breadcrumbs leading to deeper understanding.

Embrace Experimentation: Trial and Error as a Learning Tool

Okay, confession time—I used to get pretty frustrated with trial and error. It felt slow, like banging my head against the wall over and over. But the truth is, experimentation is essentially how you get smarter in this space. Instead of aiming for perfection on the first try, I now try to treat each “fail” as a data point. This shift changes everything. I jot down what I tried, what happened, and what I expected. Sometimes it’s a minor tweak—like adjusting a parameter by 0.05—other times it’s a bold change, like reworking a whole workflow. Either way, it’s progress.

You know what’s interesting? When you’re patient with this process, even mistakes start feeling less like setbacks and more like signposts for better ideas. Honestly, that mindset turns debugging from a chore into something a bit… dare I say it? Fun.

Breaking Down Complex Workflows: Simplifying for Clarity

Here’s a trick I keep coming back to: when an AI pipeline looks like spaghetti code after a wild night of coding, just stop and simplify. Break it into bite-sized pieces. I mean, seriously, sometimes I’ll spend an entire afternoon just mapping out a complex workflow as a flowchart on a whiteboard (color-coded markers in hand, feeling like a strategist). It’s kind of meditative, actually. And once you compartmentalize, it’s so much easier to spot where things might be falling apart.

For example, I once had a workflow with about eight moving parts—data preprocessing, feature extraction, model tuning, and so on. Pinpointing where an unexpected output came from felt impossible until I isolated each stage. Then it was like, aha! The problem was a missing normalization step that threw off the whole thing. Breaking things down really saved me weeks of head-scratching.
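To show what isolating each stage can look like in code, here’s a small sketch where every stage comes with a sanity check on its output, so the first stage that misbehaves gets named in the error. This isn’t the workflow from my story; the two stages and the min-max normalization are simplified stand-ins I picked because they’re easy to verify.

```python
def run_with_checks(value, stages):
    """Run (name, stage, check) triples; report the first stage whose output fails."""
    for name, stage, check in stages:
        value = stage(value)
        if not check(value):
            raise ValueError(f"stage {name!r} produced unexpected output: {value!r}")
    return value


def load(rows):
    """Convert raw string values to floats."""
    return [float(r) for r in rows]


def normalize(values):
    """Min-max scale to [0, 1]; constant inputs map to 0.0."""
    low, high = min(values), max(values)
    span = high - low
    return [(v - low) / span if span else 0.0 for v in values]


def in_unit_range(values):
    """Check that every value lies in [0, 1]."""
    return all(0.0 <= v <= 1.0 for v in values)


stages = [
    ("load", load, lambda vs: len(vs) > 0),
    ("normalize", normalize, in_unit_range),
]
print(run_with_checks(["10", "20", "30"], stages))  # [0.0, 0.5, 1.0]
```

If someone drops the `normalize` stage and a later stage still expects values in the unit range, the range check fails right where the bad data first appears instead of three stages downstream.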

Document Your Process: Create a Debugging Playbook

Does anyone else hate documentation as much as I used to? I’d rather wrestle with a stubborn algorithm than write notes. But here’s the kicker—documenting what you’re doing as you work is an absolute lifesaver. I started keeping a debugging playbook last year, and now it’s my go-to whenever I’m stuck or need to revisit an old project.

It doesn’t have to be fancy. I use plain text files with dates, descriptions of the problem, my hypotheses, the experiments I ran, outcomes, and next steps. Sometimes I add screenshots or plots—anything that jogs my memory later. Trust me, there’s nothing worse than that sinking feeling of trying to retrace your steps from months ago with zero clues.
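If you want a nudge toward consistency, a tiny helper can do the formatting for you. This is only a sketch of how my plain-text entries could be automated. The field names mirror the list above, while the function name, the `===` date header, and the file layout are my own assumptions.

```python
from datetime import date
from pathlib import Path


def add_playbook_entry(path, problem, hypothesis, experiment, outcome, next_step, today=None):
    """Append one dated, plain-text debugging entry to the playbook file."""
    today = today or date.today()
    entry = "\n".join([
        f"=== {today.isoformat()} ===",
        f"Problem: {problem}",
        f"Hypothesis: {hypothesis}",
        f"Experiment: {experiment}",
        f"Outcome: {outcome}",
        f"Next step: {next_step}",
        "",
        "",  # blank line between entries
    ])
    # Mode "a" appends, so earlier entries are never overwritten.
    with Path(path).open("a", encoding="utf-8") as handle:
        handle.write(entry)
    return entry
```

A call like `add_playbook_entry("playbook.txt", "Outputs drift after retraining", "Normalization step skipped", "Re-ran with normalization enabled", "Drift gone", "Add a range check")` takes a few seconds, and future you will be grateful.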

Stay Curious: Continuously Learning and Adapting

Honestly, this is the heart of a debugging mindset: never settling into complacency. The AI field evolves at lightning speed. What worked last year might not cut it today. I try to read a little every morning, whether it’s a blog post, a research paper, or a forum thread where fellow troubleshooters share war stories.

And sometimes, the best lessons come from the weirdest places. I remember watching a livestream of folks hacking on vintage video game AI, and suddenly the way they debugged pixel-perfect jumps gave me a fresh perspective on timing issues in my neural nets. So yeah, staying curious means being open to inspiration from unexpected corners.

So, whether you’re just starting out or have been wrestling with AI glitches for years, building this troubleshooting mindset can make all the difference. It’s less about having every answer upfront and more about embracing the messy, sometimes frustrating journey and finding joy in the process of discovery.

The Future of AI Workflow Debugging: What’s Coming Next?

You know, debugging AI workflows almost feels like trying to solve a puzzle where the pieces keep shifting. And honestly, it’s been a bit of a headache for many of us. But looking ahead, I’m genuinely excited about some shifts on the horizon that could make this whole process a lot smoother—almost enjoyable, dare I say?

AI-Powered Debugging Tools: Automation for Automation

It might sound a bit meta, but we’re starting to see AI being used to debug other AI processes. Think about that for a second—tools that can automatically detect where your workflow trips up and even suggest fixes. It’s like having a really sharp assistant who never sleeps (and never judges your messy code). I’ve played around with a few of these emerging tools recently, and wow, the time they save is noticeable. Instead of hunting endlessly for a single misconfigured parameter, these tools flag it almost instantly.

Yet, it’s not foolproof—sometimes this automation misses subtle bugs that only a human eye can catch. But honestly, the fact that AI is stepping in to reduce our load feels like we’re inching closer to a sort of debugging utopia.

Improved Error Messaging: Making Errors More Understandable

Raise your hand if you’ve ever stared blankly at a cryptic error message, feeling like the error was speaking its own secret language. Yeah, me too. The good news? Error messages are finally evolving to be more human-friendly.

The future promises clear, contextual messages that don’t just say “Something went wrong,” but actually guide you toward what might be wrong—and how to fix it. Imagine a cold Monday morning, the office only half-lit, your coffee still warm as you see an error message that actually makes sense. It’s a small thing, but trust me—it changes your whole debugging mood.
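You don’t have to wait for the tools to catch up, either. In your own workflows you can already raise errors that say where things failed, what was received, and what to try next. Here’s a minimal sketch. `WorkflowStepError` and `parse_temperature` are hypothetical names for illustration, not from any library.

```python
class WorkflowStepError(Exception):
    """Hypothetical exception that carries context and a suggested fix."""

    def __init__(self, step, reason, hint, details=None):
        self.step = step
        self.reason = reason
        self.hint = hint
        self.details = details or {}
        super().__init__(self._format())

    def _format(self):
        lines = [f"Step '{self.step}' failed: {self.reason}"]
        for key, value in self.details.items():
            lines.append(f"  {key}: {value!r}")
        lines.append(f"  Try: {self.hint}")
        return "\n".join(lines)


def parse_temperature(config):
    """Read a sampling temperature from config, explaining any problem found."""
    raw = config.get("temperature")
    try:
        return float(raw)
    except (TypeError, ValueError) as exc:
        raise WorkflowStepError(
            step="load_config",
            reason="temperature is not a number",
            hint="set temperature to a value such as 0.7",
            details={"received": raw},
        ) from exc
```

Chaining with `from exc` keeps the original low-level error in the traceback, so you get the friendly message without losing the detail underneath.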

Standardized Workflow Formats: Enhancing Interoperability

Here’s something I’ve noticed in my own projects: juggling multiple AI tools often meant dealing with incompatible formats. It’s like trying to fit a square peg in a round hole—you know it won’t go smoothly.

But there’s a growing push for standardized formats that allow different AI workflows and platforms to talk the same language. This isn’t just a timesaver; it’s a game-changer for teams working across tools and environments. It means less “export/import” headache and more focus on the actual creative and analytical work. It’s like finally getting all your favorite gadgets to sync seamlessly—the kind of satisfying moment where tech just works together.

The Rise of Explainable AI (XAI): Peeking Inside the Black Box

Now, this one is close to my heart—and a bit tricky. AI has often been this mysterious “black box” where decisions happen without much explanation. That used to give me, and many others, this nagging feeling of unease. How can you trust something if you don’t really know how it arrives at its conclusions?

Explainable AI (XAI) is changing that dynamic. It’s like pulling back the curtain and seeing the gears turn inside, helping us understand why the AI flagged a certain anomaly or made a prediction. In industries where decisions carry heavy weight—say healthcare or finance—this matters deeply.

That transparency builds trust, and for developers, it’s a tool for more precise debugging. Plus, I’ve found it fascinating (and a bit humbling) to peek behind the scenes of these complex models. It makes you appreciate the craftsmanship and logic—and also see their quirks.
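For a taste of what peeking inside can look like, here’s a bare-bones sketch of permutation importance, one common XAI technique (my choice for illustration, and far from the only one). The idea: shuffle one feature at a time and see how much accuracy drops. The `toy_model` and the random data are stand-ins so the example is self-contained.

```python
import random


def accuracy(model_fn, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(model_fn(row) == label for row, label in zip(rows, labels))
    return correct / len(rows)


def permutation_importance(model_fn, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled, one at a time."""
    rng = random.Random(seed)
    baseline = accuracy(model_fn, rows, labels)
    drops = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(model_fn, shuffled, labels))
    return drops


def toy_model(row):
    """Stand-in model: flags an anomaly from feature 0 only, ignoring feature 1."""
    return row[0] > 0.5


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(row) for row in rows]
drops = permutation_importance(toy_model, rows, labels, n_features=2)
print(drops)
```

Shuffling feature 1 changes nothing because the toy model never looks at it, so its drop is zero, while shuffling feature 0 hurts accuracy noticeably. For debugging, a surprising zero (or a surprisingly large drop) is often the first clue that a model is leaning on the wrong input.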

So yeah, the future of AI workflow debugging is looking bright, even if a bit complex. It’s a mix of smarter tools, friendlier messages, better collaboration formats, and deeper understanding. If you ask me, these changes won’t just make our jobs easier—they’ll make the whole AI journey more human, which is exactly what we need.