The day before Google’s AI announcements at the I/O Developers Conference, OpenAI made a significant move. On Monday, OpenAI hosted a live Spring Event, revealing updates that had been generating buzz for months. Here’s the key information:

- Introduction of a new GPT-4 variation, named GPT-4o (Omnimodel).

- GPT-4o will be accessible to both free and paid users.

- Paid users will enjoy capacity limits up to 5x higher than those of free users.

- The GPT Store, previously exclusive to ChatGPT Plus users, will soon be available to free users.

- GPT-4o integrates transcription, intelligence, and text-to-speech capabilities into one model.

- A new desktop assistant that can “hear” and “see” what you’re working on.

- GPT-4o will be available through the API at a reduced cost.

- A live view mode that likely enables real-time vision use.

- Reduced latency for a more real-time experience in voice-to-voice communication.

- A more human-like interaction experience.

- Rollout to users is set to begin in the coming weeks.

OpenAI’s latest innovation, GPT-4o, is poised to revolutionize the landscape of artificial intelligence. The model was announced at OpenAI’s live Spring Event, and the “o” in its name stands for “omni,” signifying its ability to seamlessly integrate text, audio, and visual content. This cutting-edge model is set to redefine human-computer interaction, offering unprecedented versatility and performance. Here’s a detailed look at what GPT-4o brings to the table and why it’s a game-changer.

Multimodal Mastery

GPT-4o can accept any combination of text, audio, and images as input and generate any combination of them as output. This multimodal capability is a significant leap forward, enabling more natural and dynamic interactions.

Imagine being able to converse with an AI that not only understands your words but also processes the tone of your voice, background noises, and even visual cues. This level of interaction is akin to having a conversation with another person, making technology feel more intuitive and human-like.

Lightning-Fast Response

One of the standout features of GPT-4o is its speed. It can respond to audio inputs in as little as 232 milliseconds, with an average response time of 320 milliseconds. This is comparable to human response times in conversation, making interactions with GPT-4o feel fluid and instantaneous. It is a considerable step up from the previous Voice Mode, which had average latencies of 2.8 seconds (with GPT-3.5) and 5.4 seconds (with GPT-4).
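To put those figures in perspective, here is a quick back-of-the-envelope calculation. It uses only the latencies quoted above; the constant and function names are illustrative.

```python
# Average voice latencies quoted above, in seconds.
GPT_4O_AVG = 0.320
PREVIOUS = {"GPT-3.5": 2.8, "GPT-4": 5.4}


def speedup(old_seconds: float, new_seconds: float = GPT_4O_AVG) -> float:
    """Return how many times shorter the new latency is than the old one."""
    return old_seconds / new_seconds


for model, latency in PREVIOUS.items():
    print(f"{model}: {latency:.1f} s -> {GPT_4O_AVG * 1000:.0f} ms "
          f"(ratio {speedup(latency):.2f})")
```

On these numbers, the average GPT-4o response arrives in roughly one ninth of the time of the GPT-3.5 voice experience and roughly one seventeenth of the GPT-4 one.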

Enhanced Language Performance

GPT-4o matches the performance of GPT-4 Turbo on text and code in English and surpasses it in non-English languages. This makes GPT-4o an excellent tool for global applications, enhancing its usability across different languages and regions. Whether you’re coding or crafting content in multiple languages, GPT-4o offers superior performance and versatility.

Superior Vision and Audio Understanding

In addition to its text capabilities, GPT-4o excels in understanding and generating visual and audio content. It’s particularly adept at interpreting images and audio, offering a level of accuracy that surpasses existing models. This makes GPT-4o ideal for creating rich multimedia experiences, from interactive content to real-time customer support.

Live demo of the GPT-4o voice mode

Cost-Effective and Accessible

Despite its advanced capabilities, GPT-4o is 50% cheaper and 2x faster than GPT-4 Turbo in the API. This cost-efficiency makes high-performance AI technology more accessible for various applications, from small businesses to large enterprises. The model is available in both free and paid tiers, with paid users enjoying up to 5x higher message limits.
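As a rough illustration of what “50% cheaper” could mean for a real workload, the sketch below compares monthly API spend for the two models. The per-token prices and the token volumes are assumptions chosen for illustration (the second price list is simply half the first); check OpenAI’s pricing page for current figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price in USD per one million tokens (assumed, illustrative values)."""
    input_per_million: float
    output_per_million: float


# Assumption: placeholder prices, chosen so the second model costs half the first.
GPT_4_TURBO = Pricing(input_per_million=10.0, output_per_million=30.0)
GPT_4O = Pricing(input_per_million=5.0, output_per_million=15.0)


def monthly_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimate spend for a given volume of input and output tokens."""
    return (input_tokens / 1_000_000 * pricing.input_per_million
            + output_tokens / 1_000_000 * pricing.output_per_million)


# Hypothetical workload: 20M input tokens and 5M output tokens per month.
turbo = monthly_cost(GPT_4_TURBO, 20_000_000, 5_000_000)
omni = monthly_cost(GPT_4O, 20_000_000, 5_000_000)
print(f"GPT-4 Turbo: ${turbo:,.2f}  GPT-4o: ${omni:,.2f}  saving: {1 - omni / turbo:.0%}")
```

Because both input and output prices are halved in this sketch, the saving stays at 50% whatever the mix of input and output tokens.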

Model Availability and Roll-Out Strategy

GPT-4o is rolling out iteratively, starting with text and image capabilities in ChatGPT. Both free and Plus users can access GPT-4o, with Plus users benefiting from higher message limits. A new version of Voice Mode with GPT-4o is set to launch in alpha within ChatGPT Plus in the coming weeks. Developers can also access GPT-4o in the API as a text and vision model, with support for its audio and video capabilities coming soon to a select group of trusted partners.
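For developers, using GPT-4o as a text and vision model might look something like the sketch below. It assumes the official `openai` Python package and its Chat Completions interface, plus an `OPENAI_API_KEY` environment variable; the helper names `build_vision_request` and `describe_image` are made up for this example.

```python
import os


def build_vision_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build keyword arguments for a chat request with text plus one image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def describe_image(prompt: str, image_url: str) -> str:
    """Send the request. Needs `pip install openai` and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the builder works without the SDK

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.chat.completions.create(**build_vision_request(prompt, image_url))
    return response.choices[0].message.content
```

Calling `describe_image("What is in this picture?", "https://example.com/photo.png")` with a real, publicly reachable image URL would return the model’s text reply. Keeping the payload builder separate makes it easy to inspect what is being sent without spending API credits.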

Key Innovations and Features

1. Omnimodal Integration

GPT-4o combines transcription, intelligence, and text-to-speech into a single model, significantly reducing latency and enhancing real-time interaction.

2. Desktop Assistant

The new desktop assistant can “hear” and “see” what you’re working on, providing context-aware assistance that boosts workplace efficiency.

3. Affordable API Access

OpenAI offers GPT-4o at a reduced cost via its API, encouraging broader adoption and integration into various business operations.

4. Live View Mode

Live View mode allows GPT-4o to use vision in real-time, opening new possibilities for interactive and immersive applications.

5. Human-Like Interactions

Reduced latency in voice-to-voice communication and the ability to express conversational emotions make GPT-4o interactions feel more natural and engaging.

6. Redefining Customer Support

With GPT-4o, real-time customer support can feel natural and intuitive, enhancing user satisfaction and engagement.

7. Enhanced Creativity and Innovation

GPT-4o provides tools that enhance workflows, creativity, and innovation, allowing businesses to leverage advanced AI capabilities seamlessly.

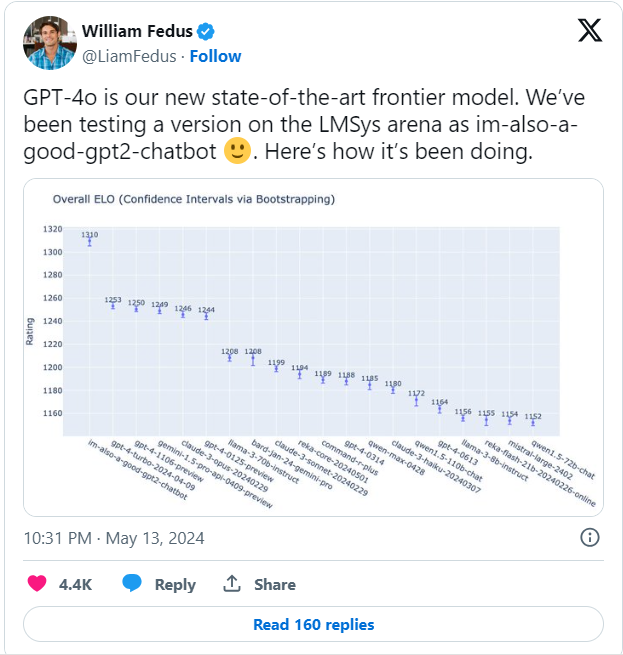

How did Twitter react to the GPT-4o release?

FAQ about GPT-4o

What is GPT-4o?

GPT-4o is an evolution of the GPT-4 AI model, currently used in services like OpenAI’s own ChatGPT. The “o” stands for “omni,” not because it’s omniscient, but because it unifies voice, text, and vision. That contrasts with GPT-4, which is mostly about typed text interactions, with exceptions such as image generation, speech transcription, and text-to-speech.

How and when is GPT-4o going to be available?

The model is coming to all tiers of ChatGPT as of May 13, including free users. There are some catches here: ChatGPT Plus and Team subscribers get five times as many prompts, and for everyone, conversations fall back to GPT-3.5 once prompt limits are hit. Also, the new voice functions are initially deploying only to Plus subscribers, and only in an early alpha state sometime before the end of June. We’ll see GPT-4o enterprise features introduced around the same time.
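The tier rules can be pictured as a small toy model. This is only a sketch of the behavior described above, not how ChatGPT is actually implemented: the free-tier limit of 10 prompts is a made-up placeholder, and the `Session` class is hypothetical.

```python
from dataclasses import dataclass

# Assumption: the real free-tier limit is not stated here; 10 is a placeholder.
FREE_PROMPT_LIMIT = 10
PAID_MULTIPLIER = 5  # Plus and Team subscribers get five times as many prompts


@dataclass
class Session:
    tier: str  # "free", "plus", or "team"
    prompts_used: int = 0

    @property
    def limit(self) -> int:
        """GPT-4o prompt allowance for this tier."""
        if self.tier in ("plus", "team"):
            return FREE_PROMPT_LIMIT * PAID_MULTIPLIER
        return FREE_PROMPT_LIMIT

    def next_model(self) -> str:
        """Pick the model for the next prompt and count it against the limit."""
        if self.prompts_used < self.limit:
            self.prompts_used += 1
            return "gpt-4o"
        return "gpt-3.5"  # fallback once the GPT-4o allowance is spent
```

With these placeholder numbers, a free session gets 10 GPT-4o replies before falling back to GPT-3.5, while a Plus or Team session gets 50.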

It’s not clear when we’ll see GPT-4o migrate outside of ChatGPT, for example to Microsoft Copilot. But OpenAI is opening the chatbots in the GPT Store to free users, and it would be odd if third parties didn’t leap on technology easily accessible through ChatGPT. The company is being cautious, however — for its voice and video tech, it’s beginning with “a small group of trusted partners,” citing the possibility of abuse.

Is ChatGPT 4o free?

Yes. GPT-4o is available to all ChatGPT users, including those on the free plan. Until now, GPT-4-class models had only been available to people who pay a monthly subscription. OpenAI describes this as important to its mission of putting great AI tools in the hands of everyone.

Future Prospects

The launch of GPT-4o marks a significant milestone in the AI industry. By making advanced AI technology more accessible and versatile, OpenAI is paving the way for a future where human-computer interaction is seamless and intuitive. As we embrace this wave of AI innovation, businesses of all sizes can harness the power of GPT-4o to transform their workflows, enhance creativity, and drive innovation.

Are you ready to ride the AI wave with GPT-4o? The future of artificial intelligence is here, and it’s more exciting than ever.