When you hear the phrase “AI agents working together,” it probably brings to mind sci-fi robots forming a team. But this is very real, and it’s already shaping how we use technology in our daily lives. From AI customer support bots that hand off queries to one another to warehouse robots coordinating their movement, this kind of teamwork is increasingly common.

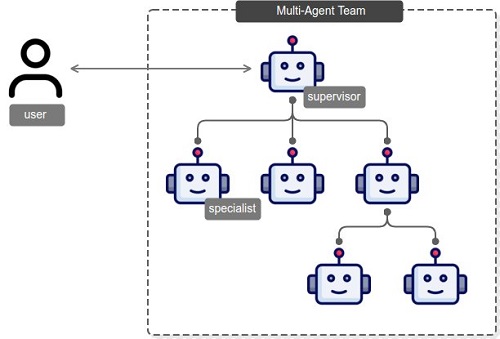

Multi-agent collaboration is when several independent AI agents operate within the same environment, exchanging data, dividing tasks, and making joint decisions. These agents don’t just coexist; they actively coordinate to complete a process more effectively than one agent could alone.

Think of it like a smart team of coworkers, each with their own role, working together to finish a big project—only these coworkers are software-based agents.

So what exactly is going on behind the scenes when AI agents “collaborate”? Let’s break it down in more detail.

Single-Agent vs. Multi-Agent Systems: Key Differences

To understand why multi-agent collaboration matters, it helps to compare it with its simpler counterpart: single-agent systems.

A single-agent system is exactly what it sounds like—one AI working independently in its environment. Think of a chatbot answering customer queries, or a smart vacuum navigating a room. It gathers information, makes decisions, and acts—all on its own.

But as tasks become more complex—like managing a fleet of delivery drones or coordinating customer service across languages and platforms—one agent isn’t enough. That’s where multi-agent systems come in.

In a multi-agent system (MAS):

- Each agent has its own goals, abilities, and knowledge base.

- Agents communicate with each other to share information.

- They may cooperate, compete, or negotiate to solve problems.

- Decisions are often made collectively, not in isolation.

In short, single-agent AI works well for simple, isolated tasks, while multi-agent AI is better suited for systems that require distributed thinking, coordination, or adaptability.

Why Multi-Agent Systems Matter

The value of multi-agent collaboration isn’t just in making AI smarter—it’s about making complex workflows manageable.

Let’s say you’re running a content automation platform. One agent might write scripts, another might edit video, and a third might schedule posts across social media. Instead of relying on one large system to juggle everything, you’ve got a team of agents, each focused on one job and faster at it.

Here’s where it really pays off:

- Scalability: Need to handle more tasks? Add more agents, usually without rebuilding the system.

- Flexibility: Agents can be reprogrammed or swapped out depending on the task.

- Speed: Since they can work in parallel, things get done quicker than a single system tackling one task at a time.

- Resilience: If one agent fails, others can carry on or reroute the task. It’s like having a built-in safety net.

This approach is already used in logistics, robotics, finance, and even AI customer support systems. The collaboration makes them not just efficient, but also adaptable to real-world complexity.

How AI Agents Work Together: Communication & Coordination

You know, when I first dove into how AI agents collaborate, I was struck by what a fascinating dance it is: messages, protocols, and shared intentions, all structured through AI workflows that guide each agent’s role. These workflows help them pull off complex tasks without stepping on each other’s digital toes.

The Art of AI Communication: Protocols and Languages

So here’s the scoop: AI agents don’t just blurt out messages randomly. They rely on carefully designed communication protocols—sort of like the grammar and vocabulary in human languages—that help them understand and respond to one another clearly. These protocols ensure that when one agent says “Hey, need backup on this task,” the other actually gets what’s going on and can reply appropriately.

These ‘languages’ could be something like FIPA-ACL (Foundation for Intelligent Physical Agents – Agent Communication Language), which is a bit of a mouthful but essentially gives agents a shared message format: each message is tagged with its intent (a “performative” such as request, inform, query-if, propose, or agree) alongside fields like the sender, the receiver, and the content. From my experience, the elegance is in simplicity: too complex, and the system gets bogged down; too simple, and agents might misinterpret each other.
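To make the idea concrete, here is a minimal Python sketch of an ACL-style message. It is not a FIPA library: the `AclMessage` class, the `handle` function, and the task names are hypothetical, and only a handful of real FIPA performatives (request, inform, agree, refuse, not-understood) are modeled. The point is that the receiver reads the performative first, so it knows whether it is being asked, told, or answered before it even looks at the content.

```python
from dataclasses import dataclass
from enum import Enum


class Performative(Enum):
    # A small subset of FIPA-ACL communicative acts
    REQUEST = "request"
    INFORM = "inform"
    AGREE = "agree"
    REFUSE = "refuse"
    NOT_UNDERSTOOD = "not-understood"


@dataclass(frozen=True)
class AclMessage:
    performative: Performative
    sender: str
    receiver: str
    content: str
    conversation_id: str  # lets both sides match replies to the original request


def handle(msg: AclMessage, capabilities: set[str]) -> AclMessage:
    """Answer a request with agree or refuse; anything else is not understood."""
    if msg.performative is not Performative.REQUEST:
        answer = Performative.NOT_UNDERSTOOD
    elif msg.content in capabilities:
        answer = Performative.AGREE
    else:
        answer = Performative.REFUSE
    # Swap sender and receiver so the reply goes back to whoever asked
    return AclMessage(answer, msg.receiver, msg.sender, msg.content, msg.conversation_id)


request = AclMessage(Performative.REQUEST, "planner", "packer", "pack-order-42", "conv-1")
reply = handle(request, capabilities={"pack-order-42"})
print(reply.performative.value, "->", reply.receiver)  # agree -> planner
```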

Coordination Strategies: Staying on the Same Page

I always think of coordination like trying to plan a weekend trip with friends. You’ve got to decide who packs the snacks, who drives, and who handles the playlist—except AI agents have to do it at lightning speed and without misunderstandings.

There are several approaches:

- Centralized coordination: Imagine one agent acting as the team lead, assigning tasks and monitoring progress.

- Decentralized coordination: Kind of like a potluck where everyone decides what to bring independently but based on an agreed-upon theme.

- Negotiation-based methods: Agents essentially haggle over who does what, balancing workload and resource use.
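Negotiation-based coordination is often illustrated with the contract net protocol: a manager announces a task, agents bid, and the best bid wins. Below is a simplified, single-round Python sketch of that idea. The `Worker` class, its `speed` field, and the bidding rule (estimated finish time) are assumptions chosen for illustration; real implementations also handle refusals, timeouts, and multi-round bidding.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    speed: float  # units of work per hour (hypothetical capability measure)
    load: float = 0.0  # work this agent has already committed to

    def bid(self, size: float) -> float:
        # Estimated hours until this task would be finished, given current commitments
        return (self.load + size) / self.speed


def award(size: float, workers: list[Worker]) -> Worker:
    # Manager's call for proposals: collect every bid and award the task to the lowest
    winner = min(workers, key=lambda w: w.bid(size))
    winner.load += size  # the winner commits to the work
    return winner


team = [Worker("fast", speed=2.0), Worker("slow", speed=1.0)]
print([award(1.0, team).name for _ in range(4)])  # ['fast', 'fast', 'slow', 'fast']
```

Because each bid includes the work an agent has already committed to, the faster worker wins most tasks but not all of them, which is the workload balancing the bullet describes.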

What’s cool is that this isn’t just theory. In real-world scenarios, such as a fleet of delivery drones collaborating on routes, systems use combinations of these strategies to avoid overlap and keep everything running smoothly. Honestly, watching those systems in action feels like witnessing an ultra-organized beehive buzzing with purpose.

Shared Goals and Task Delegation: Dividing and Conquering

One thing that really jumped out at me is how AI agents manage shared goals. It’s not just about communicating; it’s about understanding the big picture and figuring out who is best suited for each piece of the puzzle.

Task delegation here is crucial. Instead of every agent trying to do everything (which would be chaotic—trust me, I’ve tried multitasking and it’s a mess), they divide responsibilities based on their capabilities and priorities.

For instance, in a customer service chatbot setup, one AI might specialize in understanding emotions while another handles data retrieval. They talk, delegate parts of the conversation back and forth, and before you know it, you’re chatting with what feels like a single, very competent assistant.
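Here is a deliberately simple Python sketch of capability-based delegation for that customer service example. The specialist names and keyword sets are hypothetical, and keyword matching stands in for whatever classifier or language model a production system would actually use to decide who handles what.

```python
import re

# Hypothetical specialist agents, each declaring the kinds of requests it covers
SPECIALISTS = {
    "sentiment": {"angry", "frustrated", "upset"},
    "billing": {"invoice", "refund", "charge"},
    "account": {"password", "login", "username"},
}


def delegate(query: str) -> list[str]:
    """Return every specialist whose declared skills appear in the query."""
    words = set(re.findall(r"[a-z']+", query.lower()))
    matched = [name for name, skills in SPECIALISTS.items() if skills & words]
    return matched or ["generalist"]  # nobody matched: fall back to a general agent


print(delegate("I'm frustrated, my refund never arrived."))  # ['sentiment', 'billing']
print(delegate("What are your opening hours?"))  # ['generalist']
```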

Actually, this reminds me of when I collaborated on a project with several digital tools; each had its specialty, and only by trusting each other’s roles did the whole process succeed without me tearing my hair out. AI agents do much the same, but at scales and speeds that leave me both awestruck and, well, a tiny bit envious.

Types of Multi-Agent Architectures: Centralized, Decentralized, Hybrid

Alright, so when we dive into multi-agent architectures, it’s kind of like looking at different ways a team can organize itself. You know, like how sometimes a team has one coach who calls all the shots, other times everyone works solo but shares info, and sometimes… well, it’s a bit of both.

Let me walk you through these styles — centralized, decentralized, and hybrid — so you get a clearer picture. I find it helps to think about these architectures like management styles in work or even group projects back in college. And, honestly, it’s fascinating how these concepts overlap with everyday experiences.

1. Centralized Architecture

In this setup, there’s usually one main controller (like a leader or coordinator) that tells other agents what to do. This central agent collects data from all the others, processes it, makes decisions, and then sends instructions back.

Example in Real Life:

Think of an air traffic control tower. The tower monitors all aircraft in its zone and tells each one when to take off, land, or change direction. The planes (agents) follow the instructions rather than deciding on their own.

Pros:

- Clear decision-making path

- Easier to monitor or troubleshoot

Cons:

- If the central agent fails, the whole system might stop

- Can slow down if too many agents are connected
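A toy Python version of the control tower shows the centralized pattern: every plane reports to one coordinator, and only the coordinator decides the order. The `Tower` class and its least-fuel-first rule are hypothetical simplifications, not how real air traffic control works.

```python
import heapq
from itertools import count
from typing import Optional


class Tower:
    """Central coordinator: collects reports from every plane and decides the landing order."""

    def __init__(self) -> None:
        self._pending: list[tuple[float, int, str]] = []
        self._arrival = count()  # tie-breaker so equal fuel keeps arrival order

    def report(self, plane: str, fuel_minutes: float) -> None:
        # Planes only report their state; they do not choose when to land
        heapq.heappush(self._pending, (fuel_minutes, next(self._arrival), plane))

    def clear_next(self) -> Optional[str]:
        # The tower's instruction: the plane with the least fuel lands first
        if not self._pending:
            return None
        return heapq.heappop(self._pending)[2]


tower = Tower()
tower.report("AB12", fuel_minutes=45)
tower.report("CD34", fuel_minutes=12)
tower.report("EF56", fuel_minutes=30)
print([tower.clear_next() for _ in range(3)])  # ['CD34', 'EF56', 'AB12']
```

Everything hinges on that single `Tower` object, which is exactly the first con above: if it goes down, no plane gets cleared.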

2. Decentralized Architecture

Here, each agent works independently but still collaborates with others. There’s no central boss. Instead, agents communicate with one another directly and make decisions based on local data and peer interactions.

Example in Real Life:

Imagine a colony of ants. No ant is in charge, but they follow simple rules and communicate through signals like scent trails. Together, they build nests, find food, and protect the colony.

Pros:

- More flexible and robust (one agent’s failure doesn’t break the system)

- Better for large-scale systems where central control isn’t practical

Cons:

- Coordination can get tricky

- May take longer to reach agreement
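A classic way to see decentralized agreement is average consensus: each agent repeatedly averages its own reading with its immediate neighbors’ readings, with no central node collecting everything. The Python sketch below assumes four agents arranged in a ring, each starting with a different local sensor value (hypothetical numbers).

```python
def ring(n: int) -> dict[int, list[int]]:
    # Each agent can only talk to the agent on its left and the agent on its right
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def consensus_round(values: list[float], neighbors: dict[int, list[int]]) -> list[float]:
    """One synchronous round: every agent averages its value with its neighbors' values."""
    return [
        (values[i] + sum(values[j] for j in neighbors[i])) / (1 + len(neighbors[i]))
        for i in range(len(values))
    ]


readings = [10.0, 20.0, 30.0, 40.0]  # each agent's local measurement
neighbors = ring(len(readings))
values = readings
for _ in range(10):
    values = consensus_round(values, neighbors)

print([round(v, 2) for v in values])  # [25.0, 25.0, 25.0, 25.0]
```

No agent ever sees all four readings, yet all of them settle on the global average of 25. It takes several rounds of messages to get there, which is the “may take longer to reach agreement” trade-off in action. (This simple averaging rule lands on the exact mean here because every agent in the ring has the same number of neighbors.)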

3. Hybrid Architecture

This combines parts of both centralized and decentralized setups. Some agents may be grouped under local coordinators, or the system might switch modes depending on the task.

Example in Real Life:

A ride-sharing app is a good example. There’s a central system matching drivers and riders (centralized), but once matched, the drivers operate independently, navigating and making decisions on their own (decentralized).

Pros:

- Balances control with flexibility

- Can scale up or down depending on the need

Cons:

- More complex to design and maintain

- Needs careful planning to avoid overlap or conflict

So yeah, whether you lean towards centralized, decentralized, or hybrid, it really depends on the problem you’re tackling and the environment you’re in. And honestly, sometimes I find myself rooting for hybrids just because life rarely fits into neat boxes.

Use Cases: Where Multi-Agent AI Is Making Its Mark Today

Multi-agent collaboration in AI isn’t just a lab experiment—it’s already powering real systems in everyday life. Let’s look at some common areas where multiple AI agents work together to handle complex tasks.

Autonomous Vehicles: Orchestrating Traffic Flow

Imagine being in a city where every self-driving car, traffic light, and even emergency vehicle is chatting seamlessly with one another. It sounds like the plot of a sci-fi movie, but it’s a glimpse of what multi-agent AI is already being built and tested to do. When these vehicles communicate, they can manage traffic flow dynamically, reducing jams and potentially lowering accidents by anticipating and reacting to each other’s moves.

For instance, in places like Pittsburgh and Phoenix, where autonomous vehicle testing is more advanced, multi-agent systems coordinate to optimize routes and keep vehicles from crowding on the roads. It’s not just about each car driving safely but how they collectively create a more efficient, greener traffic ecosystem. I remember reading about a trial where cars reduced idle time by up to 25%, which—let’s be honest—sounds like a dream on those endlessly clogged mornings.

Smart Warehouses and Logistics: Optimizing Supply Chains

Now here’s a sector that makes you appreciate the magic behind timely deliveries (ever wonder how that late-night Amazon order shows up so fast?). Multi-agent AI in warehouses means robots don’t just operate blindly; they negotiate tasks, share space, and even troubleshoot on the fly.

I visited a distribution center once, and watching those bots zoom around was like seeing an intricate ballet—each one knowing exactly where to go, what to pick, and how to avoid collisions. When you think about the sheer volume—sometimes tens of thousands of products moving daily—coordinating all that manually would be nuts. Multi-agent systems optimize routes, manage restocking schedules, and adapt if a robot breaks down. It’s like they have their own tiny “meeting” to reroute tasks. The result? Faster fulfillment and fewer errors, which, to me, feels like a huge win for both businesses and consumers.
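The “adapt if a robot breaks down” step can be sketched in a few lines of Python. The robot and pick IDs are hypothetical, and the rule (give each orphaned pick to whichever surviving robot has the shortest queue) is one simple choice among many; real warehouse systems also weigh travel distance, battery level, and deadlines.

```python
def reassign(plan: dict[str, list[str]], failed: str) -> dict[str, list[str]]:
    """Redistribute a failed robot's unfinished picks to the least-busy surviving robots."""
    # Copy the surviving robots' queues so the caller's original plan is left untouched
    new_plan = {robot: list(picks) for robot, picks in plan.items() if robot != failed}
    if not new_plan:
        raise ValueError("no surviving robots to take over the work")
    for pick in plan.get(failed, []):
        least_busy = min(new_plan, key=lambda robot: len(new_plan[robot]))
        new_plan[least_busy].append(pick)
    return new_plan


plan = {"r1": ["A1", "A2"], "r2": ["B1"], "r3": ["C1", "C2", "C3"]}
print(reassign(plan, failed="r3"))
# {'r1': ['A1', 'A2', 'C2'], 'r2': ['B1', 'C1', 'C3']}
```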

Chatbots with Multi-Task Coordination: Handling Complex Customer Queries

Okay, this one I find pretty cool, especially since many of us have probably gotten frustrated with chatbots that don’t quite get what we need. Multi-agent chatbots don’t just handle single questions; the agents inside them collaborate to manage complex, layered conversations. One agent might focus on billing, another on technical issues, and they pass the conversation back and forth seamlessly.

I tried a customer service bot recently for a complicated account problem, and honestly, it felt less like talking to a robot and more like chatting with a team—each part bringing in expertise without asking me to repeat myself every time. It’s these systems that can transform frustrating experiences into surprisingly smooth ones. And with companies investing heavily in this, I’d bet we’ll see more chatbots that actually listen and understand soon.

Game AI and Simulations: Creating Realistic and Dynamic Environments

Finally, if you’re like me and have spent way too many hours gaming—or even just watching simulations—you’ve probably noticed how NPCs (non-player characters) are getting smarter. Multi-agent AI allows these characters to coordinate their actions, adapt to player strategies, and even form alliances or rivalries dynamically.

For example, in strategy games like “Civilization” or immersive RPGs, agents represent different factions, each with their own goals and abilities. When they negotiate, compete, or team up, it creates a richer, more unpredictable experience. What’s fascinating is how these systems mirror social behaviors, making game worlds feel less scripted and more alive. I always find myself impressed when an AI opponent surprises me—not just because it’s tough, but because it reacts in ways that feel genuinely human.

So yeah, multi-agent AI isn’t some distant future concept. It’s actively weaving itself into the fabric of how we drive, shop, get support, and even play. And honestly, I can’t wait to see what happens next—because if history’s taught me anything, these systems will only get more clever, more collaborative, and way more integral to our daily lives.

The Real Perks of Collaborative AI Agents

When multiple AI agents work together, they bring more to the table than any single agent could manage alone. Think of it like a team—each member has a role, and when they coordinate well, things get done faster and more accurately. Here’s how that plays out in real-world systems:

Increased Efficiency and Speed: Getting More Done, Faster

Okay, first off—speed. When I think about collaborative AI agents, I imagine this entire assembly line where each AI is specialized, focused on its own little task, yet constantly sharing updates with its pals. This means tasks that would normally take ages get sliced down dramatically.

In fact, this distributed approach reminds me of how I used to organize group projects back in university. Everyone tackled what they were best at, and we finished so much faster than if one person tried to do everything. AI agents doing this continuously, without coffee breaks or naps? The efficiency boost adds up fast.
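A small Python sketch shows where the speedup comes from when agents work in parallel. The three “agents” here are hypothetical stand-ins that just wait (as they would on an API call or a model response), so running them concurrently with `asyncio.gather` takes roughly as long as the slowest one instead of the sum of all three. This holds for independent, I/O-bound subtasks; CPU-heavy agents would need separate processes or machines, and subtasks that depend on each other still have to wait their turn.

```python
import asyncio
import time


async def agent(name: str, seconds: float) -> str:
    # Stand-in for an agent waiting on I/O, such as an API call or a model response
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_team() -> tuple[list[str], float]:
    start = time.perf_counter()
    # All three agents start at once; gather waits for every one of them to finish
    results = await asyncio.gather(
        agent("researcher", 0.2),
        agent("image-finder", 0.2),
        agent("fact-checker", 0.2),
    )
    return list(results), time.perf_counter() - start


results, elapsed = asyncio.run(run_team())
print(results)
print(f"{elapsed:.2f}s for three 0.2s tasks")
```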

Improved Accuracy and Reliability: Using Collective Intelligence

Here’s something I find genuinely intriguing: when these agents pool their smarts, the chances of errors falling through the cracks are much lower. Imagine an AI triple-checking results by comparing notes across different specialized agents. It’s like having three friends proofread your essay instead of just one (which, trust me, can save you from some brutal red marks).

Actually, let me rephrase that—it’s not just about catching errors; it’s about using diverse perspectives to solve problems. Studies have shown that collective intelligence often outperforms individuals, even in AI systems. I guess it’s the old “two heads are better than one” thing, but multiplied.
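One common way to turn several agents’ answers into a single, more reliable one is majority voting. The Python sketch below is a minimal version, and the answers are hypothetical. It only helps when the agents’ mistakes are reasonably independent: three agents built on the same model with the same prompt tend to make the same errors, and voting cannot fix a shared blind spot.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of agents that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


answer, agreement = majority_vote(["Paris", "Paris", "Lyon"])
print(answer, f"{agreement:.0%}")  # Paris 67%
```

The agreement score is useful on its own: a low value is a signal to escalate or re-run rather than act.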

Enhanced Adaptability and Resilience: Thriving in Dynamic Environments

Now, this is where it gets pretty exciting—or kind of wild. Dynamic environments are messy; variables change, surprising conditions pop up, and a static AI might just freeze up or deliver outdated results.

But collaborative AI? Because it’s made up of various agents, each one can adapt or recalibrate based on real-time feedback from its peers. If one agent fails, others can often step in or adjust their actions. This reduces the risk of system-wide breakdowns. It’s similar to how a team can still keep working even if one member has to step away.

Think of a busy restaurant kitchen during a dinner rush. If one chef faces a sudden shortage of an ingredient, the rest adjust the menu or cooking order on the fly. That kind of resilience makes all the difference, especially when stakes are high or when dealing with fast-moving situations—like real-time traffic management or financial markets.

Honestly, I’m still wrapping my head around how these AIs manage to coordinate so smoothly without some kind of AI version of “Who left the stove on?” moments. It’s a mix of smart design and tons of iterative testing, I imagine.

Challenges in Agent Systems: Navigating Conflicts, Complexity, and Trust

When multiple AI agents work together, they’re like digital teammates. But just like humans in a group project, things don’t always go as planned. Below are the most common hurdles developers face when building systems where AI agents collaborate:

1. Conflicts Between AI Agents

AI agents are built to make decisions based on their individual goals and observations. But what if their actions interfere with each other?

- Two agents might try to complete the same task at once

- One agent may interrupt another’s workflow

- Conflicting decisions can slow down or block progress

In a smart logistics system, for example, two AI agents might assign the same truck for different deliveries. If there’s no coordination logic, that overlap leads to delays.

Solution? Developers use conflict resolution strategies like shared rules, role assignments, or real-time negotiation between agents.
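The “shared rules” approach can be as simple as a registry that only one agent can update at a time. In the hypothetical Python sketch below, two dispatch agents race to book the same truck from separate threads; a lock makes the check-and-claim step atomic, so exactly one of them succeeds and the other knows to pick a different truck. The `TruckRegistry` class and the IDs are illustrative, not part of any particular logistics platform.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


class TruckRegistry:
    """Shared rule: each truck can be assigned to only one delivery at a time."""

    def __init__(self) -> None:
        self._owner: dict[str, str] = {}
        self._lock = threading.Lock()

    def claim(self, truck: str, delivery: str) -> bool:
        # Check and claim under one lock so two agents can't both see the truck as free
        with self._lock:
            if truck in self._owner:
                return False  # already taken: the caller should choose another truck
            self._owner[truck] = delivery
            return True

    def owner(self, truck: str) -> Optional[str]:
        with self._lock:
            return self._owner.get(truck)


registry = TruckRegistry()
with ThreadPoolExecutor(max_workers=2) as pool:
    # Both agents try to book truck T7 at the same time
    outcomes = list(pool.map(registry.claim, ["T7", "T7"], ["delivery-A", "delivery-B"]))

print(outcomes)  # one True, one False; which agent wins depends on thread timing
print(registry.owner("T7"))
```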

2. Scalability Issues

Adding more AI agents to a system increases its ability to handle complex work—but it also makes things harder to manage.

- More agents = more data, decisions, and communication

- The system can slow down if every agent has to talk to every other one

- Resource management (like memory, power, bandwidth) becomes tougher

This is especially noticeable in large-scale networks like smart grids or autonomous fleets, where hundreds of AI agents operate simultaneously.

What’s the fix? Architectures are designed to group or cluster agents, limit communication scope, or allow them to work more independently.
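A quick back-of-the-envelope calculation shows why “every agent talks to every other one” stops scaling: with n agents in a full mesh there are n(n-1)/2 possible channels. The Python sketch compares that with one hypothetical clustering scheme, where agents talk only to a cluster leader and the leaders talk to each other; the cluster size of 10 is an arbitrary assumption.

```python
def full_mesh_links(n: int) -> int:
    # Every pair of agents has its own channel: n * (n - 1) / 2
    return n * (n - 1) // 2


def clustered_links(n: int, cluster_size: int) -> int:
    # Assumes n divides evenly into clusters and one member of each cluster acts as its leader
    clusters = n // cluster_size
    member_to_leader = clusters * (cluster_size - 1)  # each non-leader talks only to its leader
    leader_mesh = full_mesh_links(clusters)  # leaders talk to one another
    return member_to_leader + leader_mesh


for n in (100, 1000):
    print(n, full_mesh_links(n), clustered_links(n, cluster_size=10))
# 100 4950 135
# 1000 499500 5850
```

Growing tenfold takes the full mesh from about 5,000 channels to about 500,000, while the clustered design stays under 6,000, at the cost of extra hops and leaders that can become local bottlenecks.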

3. Building Trust Between Agents

In collaborative systems, agents rely on one another’s input to act. But what if an agent makes a poor decision—or worse, feeds incorrect data?

- Trust becomes essential for coordination

- Malfunctioning or compromised agents can corrupt outcomes

- Errors in one agent can ripple through the system

In AI-driven finance systems, one misinformed agent could trigger faulty trades if others take its data at face value.

How it’s handled: Systems often include validation checks, shared state awareness, or peer-review processes to confirm data accuracy before agents act on it.
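A simple form of validation is to compare each agent’s report with its peers’ before acting on it. The Python sketch below keeps only reports within a tolerance of the median; the feed names, prices, and 5% tolerance are hypothetical. The median is a deliberate choice: unlike the mean, it is not dragged around by one wildly wrong value, although it still fails if most agents are wrong at once.

```python
from statistics import median


def validated(reports: dict[str, float], tolerance: float = 0.05) -> dict[str, float]:
    """Keep only reports within `tolerance` (as a fraction) of the median of all reports."""
    mid = median(reports.values())
    return {
        agent: value
        for agent, value in reports.items()
        if abs(value - mid) <= tolerance * abs(mid)
    }


reports = {"feed-a": 101.2, "feed-b": 100.8, "feed-c": 100.9, "feed-d": 87.0}
trusted = validated(reports)
print(sorted(trusted))  # ['feed-a', 'feed-b', 'feed-c']
```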

4. Communication Overload

AI agents often need to share updates, intentions, or decisions with others. But too much communication can backfire.

- High messaging frequency can overload networks

- Too many updates can delay decision-making

- Agents may become dependent on each other’s input, slowing reactions

To keep systems running smoothly, designers create rules for when and how often agents should talk—and sometimes use local decision-making to cut down on chatter.
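One widely used rule for “when and how often to talk” is send-on-delta (also called event-triggered) communication: an agent broadcasts only when its value has changed meaningfully since its last broadcast. The Python sketch below assumes a hypothetical temperature-reporting agent and a threshold of 1.0 degree.

```python
from typing import Optional


class DeltaReporter:
    """Broadcasts a reading only if it differs from the last broadcast by at least `threshold`."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.last_sent: Optional[float] = None

    def maybe_send(self, reading: float) -> bool:
        if self.last_sent is None or abs(reading - self.last_sent) >= self.threshold:
            self.last_sent = reading
            return True  # a real agent would publish the message here
        return False  # change too small: stay quiet and save bandwidth


reporter = DeltaReporter(threshold=1.0)
readings = [20.0, 20.1, 20.3, 21.2, 21.3, 19.9]
sent = [r for r in readings if reporter.maybe_send(r)]
print(sent)  # [20.0, 21.2, 19.9]
```

Half the messages are skipped here, yet peers still hear about every change of a degree or more. The threshold is the tuning knob: raise it and the network gets quieter, but everyone’s picture of the world gets a little staler.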

5. Design Complexity

Designing a working multi-agent system is a serious challenge. Every agent must:

- Know its role

- Understand how to interact with others

- Follow system-wide coordination rules

It’s even more complicated when agents come from different models, vendors, or programming frameworks.

To deal with this, developers rely on standard protocols and modular designs so agents can work together regardless of how they were originally built.
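In Python, one lightweight way to express such a standard interface is `typing.Protocol`: any class with the right attributes and methods fits, whichever team or vendor wrote it. The sketch below is illustrative; the `Agent` protocol, the two toy agents, and `run_pipeline` are hypothetical, and it is a static type checker such as mypy (not the interpreter) that enforces the contract.

```python
from typing import Protocol


class Agent(Protocol):
    """The shared contract: a role name and a method that turns one task into a result."""

    role: str

    def handle(self, task: str) -> str: ...


class RuleBasedSummarizer:
    role = "summarizer"

    def handle(self, task: str) -> str:
        # Keep only the first sentence
        return task.split(".")[0] + "."


class TaggingTranslator:
    # Hypothetical stand-in for an agent wrapping a different vendor's model
    role = "translator"

    def handle(self, task: str) -> str:
        return f"[translated] {task}"


def run_pipeline(agents: list[Agent], task: str) -> str:
    # The pipeline relies only on the protocol, never on a concrete class
    for agent in agents:
        task = agent.handle(task)
    return task


print(run_pipeline([RuleBasedSummarizer(), TaggingTranslator()], "Order shipped. Tracking follows."))
# [translated] Order shipped.
```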

These challenges don’t make multi-agent AI impossible—they just require more thoughtful design, communication rules, and error-handling. The more complex the system, the more attention these details need.

Future of Multi-Agent Collaboration: What’s Next?

The future of AI won’t be shaped by single intelligent agents acting alone—it’s going to be about systems of agents working together, constantly sharing data, learning, and improving as a team. Multi-agent collaboration is already evolving fast, but what’s ahead is even more promising—and more complex.

Let’s break down what’s realistically coming next:

1. AI Agents That Learn Together, Not Just Alone

Right now, many AI agents are trained independently. But in the future, we’ll see groups of agents learning collaboratively in real-time, using techniques like multi-agent reinforcement learning (MARL).

What does this mean?

Instead of one agent figuring out the best move in a scenario, multiple agents will learn through trial-and-error together, updating their strategies based on what others do—like teammates in a game adjusting to each other’s plays.
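The simplest version of this idea is independent learners: each agent runs its own trial-and-error update, and the other agent is simply part of the world it is learning about. In the Python sketch below, two hypothetical agents play a repeated coordination game where both are rewarded only when they pick the same action. Nobody tells them which action to choose; they settle on a shared convention through experience. The learning rate, exploration rate, and number of rounds are arbitrary choices, and practical MARL methods add states, neural networks, and often centralized training.

```python
import random


class IndependentLearner:
    """Stateless Q-learner: one value estimate per action, updated from its own reward only."""

    def __init__(self, n_actions: int, rng: random.Random, alpha: float = 0.1, epsilon: float = 0.1) -> None:
        self.q = [0.0] * n_actions
        self.rng = rng
        self.alpha = alpha  # learning rate
        self.epsilon = epsilon  # how often to explore a random action

    def greedy(self) -> int:
        best = max(self.q)
        # Break ties randomly so neither agent starts with a built-in preference
        return self.rng.choice([a for a, v in enumerate(self.q) if v == best])

    def act(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.q))
        return self.greedy()

    def learn(self, action: int, reward: float) -> None:
        # Move the estimate for the chosen action a step toward the observed reward
        self.q[action] += self.alpha * (reward - self.q[action])


rng = random.Random(0)
a, b = IndependentLearner(2, rng), IndependentLearner(2, rng)
for _ in range(2000):
    x, y = a.act(), b.act()
    reward = 1.0 if x == y else 0.0  # both succeed only when they pick the same action
    a.learn(x, reward)
    b.learn(y, reward)

print("agent a prefers", a.greedy(), "| agent b prefers", b.greedy())
```

Because each agent’s partner keeps changing its behavior, the environment is non-stationary from either agent’s point of view, which is the core difficulty that dedicated MARL algorithms try to address.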

2. Decentralized Intelligence at Scale

The current trend is toward decentralized multi-agent systems—meaning there’s no single control hub. Each agent acts locally but shares updates with others in its network.

Think:

- Swarms of drones mapping disaster zones independently

- Self-driving cars adjusting speed or routes without central coordination

- IoT devices in a smart grid balancing energy loads together

3. Cross-Platform AI Collaboration (Inter-Agent Protocols)

Right now, agents mostly talk within closed ecosystems. But soon, we’ll see standardized communication frameworks—like APIs for agents—so they can work across platforms, brands, and ecosystems.

Example:

A smart home assistant might communicate with your electric car and a public charging station to book a charge slot—all in real time, even if they’re from different companies.

4. Context-Aware Agent Teams

The next step isn’t just smarter agents—it’s agents that understand context and roles within a team.

For instance, in a customer service scenario:

- One AI agent might handle emotional tone

- Another pulls up account info

- A third drafts a resolution

And they do this while understanding how their parts affect the full interaction.

This applies to:

- Virtual health assistants supporting patients

- Teaching bots that adjust content delivery together

- AI game characters that act like a coordinated team

5. Human-AI Teamwork Gets Smarter

Human-AI collaboration is going to be more natural. Agents will better recognize:

- When to ask for human input

- How to explain their decisions

- When to step back and let a person lead

This could show up in:

- Emergency response systems (AI triages, humans act)

- Design tools where AI agents suggest, but humans decide

- Legal or financial research tools that pre-scan documents collaboratively
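A common pattern for knowing when to ask for human input is a confidence threshold: the agent acts on its own when it is confident and otherwise hands the decision, with its reasoning, to a person. The Python sketch below is a minimal version; the `Decision` fields, the 0.8 threshold, and the example decisions are hypothetical, and getting an agent to produce a trustworthy confidence score is a hard problem in its own right.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float  # the agent's own estimate, between 0 and 1
    rationale: str  # a short explanation a human can check


def route(decision: Decision, threshold: float = 0.8) -> str:
    """Act automatically above the threshold; otherwise escalate with the agent's explanation."""
    if decision.confidence >= threshold:
        return f"auto: {decision.action}"
    return f"needs human review: {decision.action} (reason: {decision.rationale})"


print(route(Decision("archive duplicate invoice", 0.97, "matches an invoice already paid")))
print(route(Decision("flag contract clause as risky", 0.55, "unusual indemnity wording")))
```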

6. Ethics and Trust Become Built-In Features

As collaboration increases, so does the risk: conflicting decisions, data leaks, bias, or agents “gaming” the system.

Future multi-agent systems will need:

- Built-in auditing: Logs of who did what and why

- Fail-safe mechanisms: If an agent acts out of bounds, others can intervene

- Clear role boundaries: So agents don’t override human input or conflict with each other
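The first two requirements can be sketched together: every action goes through one gatekeeper that writes an audit entry (who, what, why, and whether it was allowed) and refuses anything outside the agent’s permitted role. The agent names, actions, and `AuditedExecutor` class in this Python sketch are hypothetical; a production system would also need tamper-resistant log storage.

```python
import time
from dataclasses import dataclass, field


@dataclass
class AuditedExecutor:
    """Logs who did what and why, and blocks actions outside each agent's allowed set."""

    permissions: dict[str, set[str]]
    log: list[dict] = field(default_factory=list)

    def execute(self, agent: str, action: str, reason: str) -> bool:
        allowed = action in self.permissions.get(agent, set())
        # Record every attempt, including blocked ones, so reviewers can see what was tried
        self.log.append(
            {"time": time.time(), "agent": agent, "action": action, "reason": reason, "allowed": allowed}
        )
        return allowed  # a real system would perform the action here only when allowed


executor = AuditedExecutor(permissions={"pricing-agent": {"adjust_price"}, "support-agent": {"issue_refund"}})
executor.execute("pricing-agent", "adjust_price", "competitor dropped price by 3%")
executor.execute("pricing-agent", "issue_refund", "customer asked in chat")  # out of bounds
for entry in executor.log:
    print(entry["agent"], entry["action"], "allowed" if entry["allowed"] else "BLOCKED")
```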

Governments and global research communities are already proposing agent governance protocols to keep these systems fair, accountable, and safe.

7. Developer Access Grows

Finally, building multi-agent systems used to be reserved for researchers. That’s changing.

In the near future:

- Cloud-based simulation environments will make it easier to test how agents interact in complex settings

- Frameworks and platforms (like Microsoft’s Project Bonsai, Google’s open-source SEED RL, or OpenAI’s collaborative models) will allow more experimentation

- Low-code platforms will let businesses deploy agent teams without needing a PhD in AI

Multi-Agent Collaboration: The New Frontier of AI Problem-Solving

Multi-agent collaboration is changing how complex problems are approached, offering solutions that are more efficient, adaptable, and intelligent than ever before. By understanding the core principles and real-world applications of collaborative AI, you can appreciate its immense potential. From autonomous vehicles managing traffic to smart warehouses optimizing supply chains, the possibilities are vast.

Frequently Asked Questions on Multi-Agent Collaboration

1. What is a multi-agent system in AI?

A multi-agent system (MAS) consists of multiple AI agents that share an environment and interact to pursue individual or shared goals. These agents coordinate actions and exchange information to solve tasks more effectively than any one of them could alone.

2. Why use multiple agents instead of one?

Many developers note, “Using a single AI to handle everything is like writing code without function calls.” Specialized agents reduce complexity, make debugging easier, and can help reduce hallucinations.

3. How do agents communicate in a multi-agent system?

Agents usually share data via message queues or shared context. One developer shared: “Managing complexity … involves using an orchestrator … Role-based and task‑based orchestration methods … Communication protocols are crucial.”
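As a rough illustration of the message-queue approach, here is a Python sketch using `asyncio.Queue`: a hypothetical research agent posts notes to a queue and a writing agent consumes them as they arrive. The agent names and the `None` end-of-stream sentinel are assumptions for the example; production systems typically use an external message broker rather than an in-process queue.

```python
import asyncio


async def researcher(outbox: asyncio.Queue) -> None:
    # Publish findings one at a time instead of handing over one big result at the end
    for topic in ["pricing", "competitors"]:
        await outbox.put(f"notes on {topic}")
    await outbox.put(None)  # sentinel: no more messages


async def writer(inbox: asyncio.Queue) -> list[str]:
    drafts = []
    # Consume messages as they arrive until the sentinel shows up
    while (msg := await inbox.get()) is not None:
        drafts.append(f"draft from {msg}")
    return drafts


async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    _, drafts = await asyncio.gather(researcher(queue), writer(queue))
    return drafts


print(asyncio.run(main()))  # ['draft from notes on pricing', 'draft from notes on competitors']
```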

4. Can AI agents learn from each other?

Yes, in a sense. Recent research using frameworks like AutoGen suggests that multiple agents can discuss, critique, and refine each other’s work to solve problems more reliably than a single model.

5. What challenges do real-world developers face with agent systems?

Reddit users frequently highlight testing and orchestration issues: “Managing dynamic multi-agent flows becomes very hard … if you don’t get your message‑exchange protocol right, your system will fail to scale.”

6. When should I consider using multiple agents?

Users say multi-agent setups shine when handling complex tasks like planning, research, or coding, even within one app. Dedicating specialized agents to subtasks can reduce token consumption and improve performance.

7. Are multi-agent systems future-proof?

No system is truly future-proof, but the approach is gaining momentum. Companies like Accenture and Salesforce are preparing for agent-to-agent (A2A) standards that let independent agents collaborate across platforms, which supports greater scalability and autonomy.